Hi all,

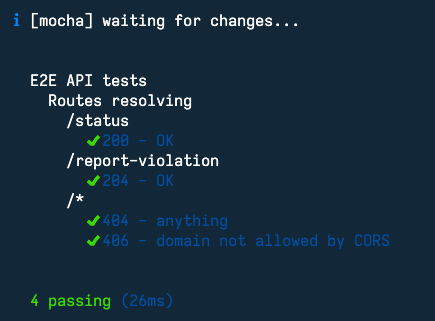

This week I was writing tests for my backend and setting up the CI test workflow.

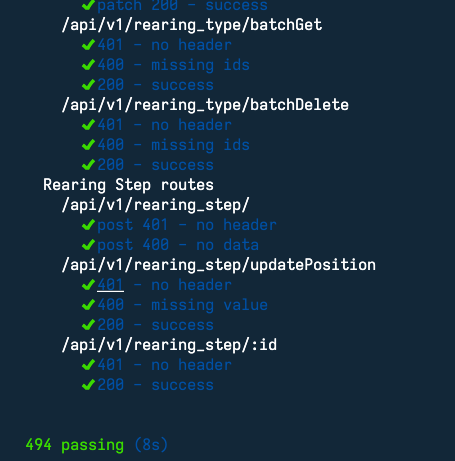

First of all, I’m really happy that I forced myself to write tests: they have already found a few bugs that I would surely have missed before going live. The tests also run rather fast, which means I can keep them running during development.

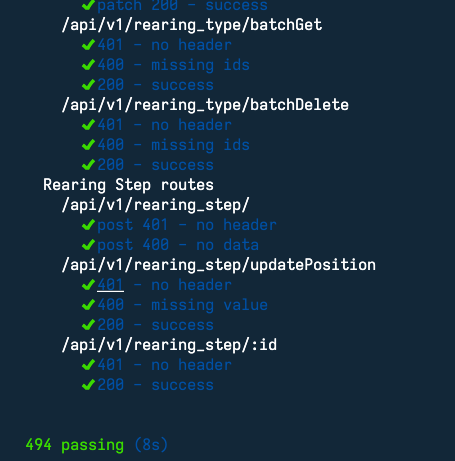

So far I have written close to 500 test cases, some of them nested, and on my local machine the whole suite runs in ~8s.

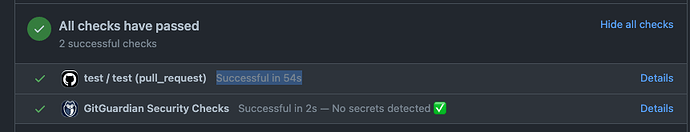

On GitHub, as part of my CI workflow, it takes ~1-2 minutes.

Pollution of test database

At first I did not know how to handle the continuous pollution of my test database, as the tests run automatically on every change. First I tried to drop the database and create a new one, but this left me with some user permission problems. Next I tried to roll back all migrations and then migrate up again, which took quite a long time, as I have already written a lot of migration files.

My final solution was to a) migrate to the latest database version and b) truncate all tables (not needed on CI, where the workflow creates a fresh database for every run). I solved b) with some raw SQL queries: first I fetch the table names from `information_schema`, then disable the foreign key checks and loop over all tables. Here is the full code, which runs before the tests:

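```js
before(async function () {
  this.timeout(10000); // Standard time-out for mocha is 2s

  console.log(' knex migrate latest ...');
  await knexInstance.migrate.latest();

  // CI creates a fresh database for every run, so truncating is only needed locally
  if (process.env.ENVIRONMENT !== 'ci') {
    console.log(' knex truncate tables ...');

    // Earlier approach, too slow with many migration files:
    // await knexInstance.migrate.rollback({ all: true });

    // SET only affects the current session; this assumes the pool reuses one connection
    await knexInstance.raw('SET FOREIGN_KEY_CHECKS = 0;');

    const tables = await knexInstance
      .table('information_schema.tables')
      .select('table_name', 'table_schema', 'table_type')
      .where('table_type', 'BASE TABLE')
      .where('table_schema', knexConfig.connection.database);

    for (const t of tables) {
      // the column can come back as TABLE_NAME or table_name, depending on the MySQL version
      const name = t.TABLE_NAME ?? t.table_name;
      // keep knex's bookkeeping tables, otherwise migrate.latest() would re-run every migration
      if (['KnexMigrations', 'KnexMigrations_lock'].includes(name) || name.includes('innodb')) {
        continue;
      }
      // ?? is knex's identifier binding, so the table name is quoted safely
      await knexInstance.raw('TRUNCATE ??;', [name]);
    }

    await knexInstance.raw('SET FOREIGN_KEY_CHECKS = 1;');
  }

  global.app = require(process.cwd() + '/dist/api/app.bootstrap');
  global.server = global.app.server;
});
```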
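If you want to unit-test the table selection on its own, it can be pulled out into a pure function that needs no database. This is a minimal sketch: `tablesToTruncate` and `truncateStatements` are hypothetical helper names, and the rows are assumed to look like the `information_schema.tables` rows from the query above (the column may arrive as `TABLE_NAME` or `table_name`).

```js
// Hypothetical helper: tables that must survive the cleanup (knex's migration bookkeeping).
const KEEP_TABLES = ['KnexMigrations', 'KnexMigrations_lock'];

// Picks the table names to truncate from information_schema.tables rows.
function tablesToTruncate(rows) {
  return rows
    // the column can come back as TABLE_NAME or table_name depending on the server
    .map((row) => row.TABLE_NAME ?? row.table_name)
    // skip the bookkeeping tables and anything InnoDB-internal
    .filter((name) => !KEEP_TABLES.includes(name) && !name.includes('innodb'));
}

// Turns the names into [sql, bindings] pairs for knex's raw(); '??' binds an identifier.
function truncateStatements(rows) {
  return tablesToTruncate(rows).map((name) => ['TRUNCATE ??', [name]]);
}
```

Inside the hook the loop would then shrink to `for (const [sql, bindings] of truncateStatements(tables)) await knexInstance.raw(sql, bindings);`, while the filtering rules can be tested without a running MySQL.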

Mocha peculiarities

Here are some cases which took me quite a while to figure out, with mocha.

done()

If a test or a `before`/`after` hook takes a callback, you have to finish it by calling `done()`, e.g.:

```js
before((done) => {
  // ... setup code ...
  done();
});
```

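If you use promises or `async` functions, do not use `done()` at all: leave out the parameter and return or `await` the promise instead. Mocha fails hooks that do both with a "Resolution method is overspecified" error.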

```js
before(async () => {
  // no done parameter: mocha waits for the returned promise
  await knexInstance.migrate.latest();
});
```
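To see why mixing the two styles fails, here is a minimal, mocha-independent sketch of how a runner can tell them apart. `runHook` is a hypothetical helper, not mocha's API; it only mimics the rule mocha applies, which looks at the number of declared parameters (`fn.length`): with a `done` parameter the hook counts as callback style, otherwise its return value is awaited, and a callback-style hook that also returns a promise is rejected.

```js
// Hypothetical mini-runner mimicking how mocha picks between callback and promise style.
function runHook(fn) {
  return new Promise((resolve, reject) => {
    // No `done` parameter: await whatever the hook returns
    // (a synchronous hook simply counts as already resolved).
    if (fn.length === 0) {
      Promise.resolve()
        .then(() => fn())
        .then(() => resolve('promise'), reject);
      return;
    }

    // `done` parameter declared: completion is signalled by calling it.
    let finished = false;
    let callbackError = null;
    let settle = null;
    const done = (err) => {
      finished = true;
      callbackError = err || null;
      if (settle) settle();
    };

    const result = fn(done);
    // Declaring `done` AND returning a promise is ambiguous, so it is an error.
    if (result && typeof result.then === 'function') {
      reject(new Error('Resolution method is overspecified. Specify a callback *or* return a Promise; not both.'));
      return;
    }

    settle = () => (callbackError ? reject(callbackError) : resolve('callback'));
    if (finished) settle(); // done() was already called synchronously
  });
}
```

So `async (done) => { ... done(); }` fails even though `done()` is called, because an `async` function always returns a promise.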

global

If you want to share variables across multiple test files, you can attach them to Node's `global` object, e.g. for your test user login:

```js
global.demoUser = {
  email: 'test@btree.at',
  password: 'test_btree',
  name: 'Test Beekeeper',
  lang: 'en',
  newsletter: false,
  source: '0',
};
```
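Why this works: Node gives every file its own module scope, but all files loaded into the same process share one `global` object, and by default mocha loads all spec files into a single process. The sketch below shows just that mechanism outside of mocha; the two throwaway files `setup.js` and `login.spec.js` are hypothetical stand-ins for a setup file and a spec file.

```js
const fs = require('fs');
const os = require('os');
const path = require('path');

// Two throwaway files in a temp dir stand in for a setup file and a spec file.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'global-demo-'));
const setupFile = path.join(dir, 'setup.js');
const specFile = path.join(dir, 'login.spec.js');
fs.writeFileSync(setupFile, "global.demoUser = { email: 'test@btree.at' };");
fs.writeFileSync(specFile, 'module.exports = () => demoUser.email;');

// Load the setup file first, then the spec file.
require(setupFile);
const readEmail = require(specFile);

// The spec module has its own scope, but still sees the property set on `global`.
console.log(readEmail()); // test@btree.at

fs.rmSync(dir, { recursive: true, force: true });
```

This relies on mocha's default serial mode. With `--parallel`, each spec file runs in its own worker process, so a global set in one file would not be visible in another; a root hook plugin loaded via `--require` is the usual place for shared setup in that case.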

Closing server

As mentioned last time, I had to use wtfnode to find out why mocha was not exiting on its own. This time it was knex, which kept its database connections open, so you have to call `knex.destroy()` in the `after` hook.

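```js
after(async () => {
  global.app.boot.stop();
  global.app.dbServer.stop();
  // knex keeps its connection pool open until it is destroyed;
  // destroy() returns a promise, so wait for it before mocha moves on
  await knexInstance.destroy();
});
```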
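Why a forgotten connection keeps mocha running: Node only exits on its own once no active handles (sockets, timers, servers) are left on the event loop, and an open connection pool is exactly such a handle. The sketch uses a hypothetical `FakePool` whose interval timer stands in for pooled sockets, with a `destroy()` that, like knex's, returns a promise.

```js
// Hypothetical stand-in for a connection pool. A real pool holds open sockets;
// here an interval timer plays that role, since it also keeps the event loop alive.
class FakePool {
  constructor() {
    this.timer = setInterval(() => {}, 1000);
  }

  // Releases the handle and resolves once it is gone.
  async destroy() {
    clearInterval(this.timer);
    this.timer = null;
  }
}

const pool = new FakePool();
// Without this call the script would never exit on its own.
pool.destroy().then(() => console.log('pool closed, process can exit'));
```

Mocha (since v4) no longer forces the process to exit after the run, which is why such a leftover handle shows up as a hanging test process; the `--exit` flag would force it, but only hides the leak.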

CI / GitHub Action

The goal was to run the tests automatically whenever there is a pull request on the main branch. This one was again a bit tricky, as you cannot really test it on your local machine.

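First I played around with how to create a database; my first idea was to use a service container (i.e. a Docker container). But after a while I figured out that the Ubuntu runners on GitHub already have MySQL installed, it is just not running, so you only need to start it. These commands run as steps in the workflow, where GitHub Actions fills in the `${{ env.* }}` expressions before the shell sees them: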

```shell
# Start MySQL (installed on the runner, but not running)
sudo systemctl start mysql
# Create our testing database
mysql -e 'CREATE DATABASE ${{ env.DB_DATABASE }};' -u${{ env.DB_USER }} -p${{ env.DB_PASSWORD }}
```

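Next up were permission problems again: MySQL 8 defaults to the `caching_sha2_password` authentication plugin, which not every Node.js client supports (the `mysql` package, for example, does not). After some googling I solved it by switching the testing user to `mysql_native_password`: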

```shell
# Change the authentication method for our testing user
mysql -e "ALTER USER '${{ env.DB_USER }}'@'localhost' IDENTIFIED WITH mysql_native_password BY '${{ env.DB_PASSWORD }}';" -u${{ env.DB_USER }} -p${{ env.DB_PASSWORD }}
# Let MySQL know that the privileges changed
mysql -e "flush privileges;" -u${{ env.DB_USER }} -p${{ env.DB_PASSWORD }}
```
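On the application side, the test config then only needs to read the same variables the workflow uses. This is a minimal sketch of a knexfile-style config and not the actual btree_server config: `buildTestConfig` and `DB_HOST` are hypothetical, and the `mysql2` client is an assumption. The migrations table name matches the `KnexMigrations` table kept by the truncation hook; knex derives the lock table name from it by appending `_lock`.

```js
// Hypothetical knex config for the test run, reading the variables the CI workflow sets.
function buildTestConfig(env = process.env) {
  return {
    client: 'mysql2', // assumption: driver choice
    connection: {
      host: env.DB_HOST || 'localhost', // hypothetical variable, defaults to the local server
      user: env.DB_USER,
      password: env.DB_PASSWORD,
      database: env.DB_DATABASE,
    },
    // knex names its lock table `${tableName}_lock`, i.e. KnexMigrations_lock
    migrations: { tableName: 'KnexMigrations' },
  };
}

// The before() hook reads knexConfig.connection.database from an object like this one.
const knexConfig = buildTestConfig();
```

Keeping the credentials in environment variables means the same config works locally and on the runner; only the values differ.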

The final working action can be found here: btree_server/test.yml (main branch of HannesOberreiter/btree_server on GitHub)

Cheers

Hannes