Thanks … I will have a good read there (a superficial one already conjures up a few déjà-vu feelings ;-) ), especially about the 512 buffer size and only getting 64 good samples out of that. Before I was aware of that, I spent many days trying to figure out why the spectrum had so many high frequencies that folded back as artefacts into the spectral range of interest. This was because I was essentially sampling a block wave with 64 good samples and 256 (left channel of 512 samples) minus 64 = 192 bogus ones. In the end I verified that the 64 samples were continuous with the 64 obtained from the next buffer read, and simply accepted that the 512 size doesn’t make sense to me. Still, not understanding this is one of the red flags that keep coming back up in my mind while I don’t fully trust the FFT.

No, not yet.

Also something worth a look from Fab Lab Bcn fame.

P.S.: OSBH also was kind of born there, at least what we learned from history.

Problem summary

Still looking at this from 10,000 ft, the discovery made by andrewjfox on GitHub sounds perfectly reasonable:

This may not be the issue, but I have found that if the buffer is not emptied (using i2s.read()) before the next cycle then the library can stop sampling, and the only way to get it going again is to restart the i2s (i2s.end → i2s.begin). The I2S library uses a double buffer so while one buffer is available to read, the other is being filled by the DMA. If the buffer is not available then the process stops.

If you’re doing lots of processing on the buffered data, before going back to read next buffer, the delay between emptying the buffer might be too long.

– https://github.com/arduino/ArduinoCore-samd/issues/294#issuecomment-375116660
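To make the quoted mechanism concrete, here is a toy host-side model of a double buffer that stalls when the readable half has not been emptied in time. It only mirrors the behaviour described in the comment above; it is not the ArduinoCore-samd implementation, and the class and method names are made up for illustration.

```cpp
// Toy model of the stall described in the quoted comment.
// NOT the ArduinoCore-samd code; all names here are hypothetical.
class ToyDoubleBuffer {
public:
    // The "DMA" has finished filling the second buffer and wants to swap.
    // Returns false if sampling has stalled.
    bool dmaBlockDone() {
        if (stalled_) return false;
        if (readable_) {        // the sketch has not emptied the other buffer yet
            stalled_ = true;    // nowhere to put new data: sampling stops
            return false;
        }
        readable_ = true;       // hand the filled buffer to the sketch
        return true;
    }

    // The sketch empties the readable buffer (analogous to reading it out).
    bool read() {
        if (!readable_) return false;
        readable_ = false;
        return true;
    }

    // Analogous to calling end() followed by begin().
    void restart() {
        readable_ = false;
        stalled_ = false;
    }

    bool stalled() const { return stalled_; }

private:
    bool readable_ = false;
    bool stalled_ = false;
};
```

In this model, two calls to dmaBlockDone() without a read() in between put the buffer into the stalled state, and only restart() gets it going again.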

Advice

So, assuming this might be the reason, we should reduce the processing time, either by having a look at the FFT processing routine or by checking for possible negative influences coming from the LMIC scheduler. If we are sure everything is absolutely correct there and we just can’t make the FFT more efficient, we should look into the concept of bottom-half processing.

This concept is used by grown-up operating systems to counter

the main problems with interrupt handling when performing longish tasks within a handler. […]

[The solution is to split] the interrupt handler into two halves: The so-called top half is the routine that actually responds to the interrupt—the one you register with request_irq. The bottom half is a routine that is scheduled by the top half to be executed later, at a safer time.

There’s a comprehensive but well-written introduction to the topic in “Tasklets and Bottom-Half Processing” from Linux Device Drivers, Second Edition. Enjoy reading!

If there are no general objections from your side and if we can’t solve the problem by some other reasonably satisfying workaround, we could check whether this concept can be applied using primitives provided by ArduinoCore-samd and/or the LMIC layer.
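A minimal sketch of how such a split could look on a microcontroller, assuming the I2S receive callback plays the role of the top half and loop() plays the role of the bottom half. BlockQueue, push() and pop() are hypothetical names, the queue depth is an arbitrary choice, and the 64-sample block size is taken from this thread; nothing here is an ArduinoCore-samd or LMIC API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kBlock = 64;  // samples per callback, as discussed in this thread
constexpr std::size_t kSlots = 4;   // hypothetical queue depth (holds kSlots - 1 blocks)

// Single-producer / single-consumer ring of sample blocks.
struct BlockQueue {
    int32_t data[kSlots][kBlock];
    volatile std::size_t head = 0;  // only written by the top half
    volatile std::size_t tail = 0;  // only written by the bottom half
    std::size_t dropped = 0;        // blocks lost because the queue was full

    // Top half: called from the receive callback. Copies and returns quickly.
    bool push(const int32_t* src) {
        assert(src != nullptr);
        const std::size_t next = (head + 1) % kSlots;
        if (next == tail) {         // queue full: count the loss, do not block
            ++dropped;
            return false;
        }
        for (std::size_t i = 0; i < kBlock; ++i) data[head][i] = src[i];
        head = next;
        return true;
    }

    // Bottom half: called from loop(), where slow work such as an FFT can run.
    bool pop(int32_t* dst) {
        assert(dst != nullptr);
        if (tail == head) return false;  // nothing queued
        for (std::size_t i = 0; i < kBlock; ++i) dst[i] = data[tail][i];
        tail = (tail + 1) % kSlots;
        return true;
    }
};
```

The idea is that the callback only ever calls push(), so the driver's buffer is emptied promptly, while the heavy processing happens on blocks obtained via pop(). The dropped counter would show whether the bottom half keeps up.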

Disclaimer

Please note that I’m writing this solely on the basis of random guesses after reading the few resources listed above that circle around the possible issue with reading from I2S. Nevertheless, please let me know whether this resonates with you.

Magic … this already shows there is an issue: it consistently stalls after writing the RMS value to serial for the second time, i.e. when I2S.end() is called. I will shuffle I2S.begin() and I2S.end() around to make sure there is no computation done in between. More later.

I have used this FFT testing code (without FFT / TTN):

Hi Clemens, how much program space (%) does this use? … I think I looked at this as well, but there was hardly anything left if used in conjunction with TTN.

Clemens, do you still have the hardware available? I would be curious whether you can reproduce the observation that I2S.end() stalls. I looked back at all the things I have done so far to scrutinize the microphone output and see that in those tests I always put I2S.begin() in the setup() function. Only when combining it with LMIC did I start using I2S.end(). And only with this microphone (ICS43432) do I run into this issue.

Hi Andreas, I am not yet convinced that this is the same issue, but that could be because I don’t see deep enough in (for me) muddy water.

By collecting data like this:

for (int j=0; j<DATA_SIZE/64; j++) get_audiosample(&data[j*64]);

And only doing processing on this sample later, I thought I escaped from the issue that there would be too much time between subsequent calls to onI2SReceive(). My reason for doing this is that I wanted the complete sample to be contiguous (and thereby not to introduce weird frequencies). Then I go on to proceed with lengthy stuff, such as the FFT analysis. And afterwards I collect a new sample via the above line of code.

This loop of collecting and doing an FFT is repeated a number of times. If the issue were the same as described in the post (i.e. not emptying a buffer in time), I would have expected it to arise within that loop. However, it only arises upon calling I2S.end().

I tried inserting a bunch of Serial.println() statements into that function in i2s.cpp and then removing them one by one, starting from the last. With the function full of println() statements, it gets through. At some point, after removing a few, it gets stuck in one of the following lines of code inside i2s.cpp:

i2sd.disableSerializer(_deviceIndex);

i2sd.disableClockUnit(_deviceIndex);

Which both rely on getting out of a loop in SAMD21_I2Sdevice.h:

while(i2s.SYNCBUSY.bit.SEREN0);

… I have no clue what that bit does and who/what sets it.

Then again, I know that this kind of debugging is risky, as it introduces different timings.
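On the SYNCBUSY question: as far as I understand the SAMD21 datasheet, a SYNCBUSY bit such as SEREN0 stays set while a register write is being synchronized into the peripheral's own clock domain, which can only complete while that clock is running. Treat that as my reading, not a confirmed diagnosis. One way to probe it without println() timing effects would be a bounded wait that reports a timeout instead of hanging. The helper below is a hypothetical sketch and not part of any library; the spin limit is an arbitrary number.

```cpp
#include <cstdint>

// Spin while busy() returns true, but give up after maxSpins polls.
// Returns true if the busy condition cleared, false on timeout.
// Hypothetical helper for diagnosis only.
template <typename BusyFn>
bool waitWhileBusy(BusyFn busy, uint32_t maxSpins) {
    for (uint32_t i = 0; i < maxSpins; ++i) {
        if (!busy()) return true;   // synchronization finished
    }
    return false;                   // still busy: report instead of hanging
}

// On the target, the unbounded loop quoted above could be probed like this
// (untested assumption; the register name is taken from the line in this post):
//   bool ok = waitWhileBusy([] { return i2s.SYNCBUSY.bit.SEREN0 != 0; }, 100000);
```

If the wait times out only in the I2S.end() path, that would at least point towards the synchronization never completing rather than towards the buffer handling.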

A completely different thought just occurred to me: with the microphone in the beehive, I use a low sampling frequency (3125 Hz) and a relatively small DATASIZE=256 (because that gives me FFT bins of ~12 Hz). Since the ICS43432 doesn’t tolerate such low sampling frequencies, I had to raise it to something above 7.19 kHz, and I picked 12500 Hz together with DATASIZE=1024 in order to get similarly spaced FFT bins. Could there be an issue with so much data being buffered?
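For reference, the bin spacing and the buffer size for both configurations can be worked out as below. The bin width is simply the sampling rate divided by the FFT size. The 4 bytes per sample is my assumption (32-bit samples); the actual type of the data array is not shown in this thread.

```cpp
#include <cassert>
#include <cstddef>

// Width of one FFT bin in Hz: sampling rate divided by the number of points.
double binWidthHz(double sampleRateHz, std::size_t fftSize) {
    assert(fftSize > 0);
    return sampleRateHz / static_cast<double>(fftSize);
}

// RAM needed for the raw sample buffer.
// bytesPerSample = 4 is an assumption (32-bit samples), not taken from the sketch.
std::size_t sampleBufferBytes(std::size_t numSamples, std::size_t bytesPerSample) {
    return numSamples * bytesPerSample;
}

// binWidthHz(3125.0, 256)   and binWidthHz(12500.0, 1024) are both about 12.2 Hz.
// sampleBufferBytes(256, 4)  is 1024 bytes.
// sampleBufferBytes(1024, 4) is 4096 bytes.
```

So both setups give the same bin spacing, and under the 32-bit assumption the larger buffer takes 4 KB, which is well within the 32 KB of SRAM on the ATSAMD21G18. That does not rule out a problem elsewhere in the buffering, of course.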

Well, I really appreciate you looking into this with me. Discussing gives new insights and helps me get my thoughts clear in order to phrase things (though I am not sure this post is the best example of that). I feel we have made some progress already by reproducing this without having LMIC involved … BTW, I have reduced the code on GitHub somewhat further and it still stalls.

I fear my Feather M0 RFM95 is currently in a box on Andreas’ desk – left from the last conference. ;-)

I compiled the sketch with a MKR1000 as the board, just for testing, and got this:

Build options changed, rebuilding all

Sketch uses 220912 bytes (84%) of program storage space. Maximum is 262144 bytes.

Thanks @Clemens, that is, I think, very similar to the number I got on the Feather M0. The sketch that lives in the hardware in my beehive (I2S, FFT and LMIC) reports the following:

Sketch uses 72072 bytes (27%) of program storage space. Maximum is 262144 bytes.

But … if it doesn’t work reliably with slightly different hardware, it is of no use, of course.

Hi Wouter, to be honest I never checked the memory consumption on the M0 because I had not run into any limitation until now. But I was surprised to see such a high load. I produced a lot of bins, so I thought this was the reason for the high consumption. But I changed some variables such as sampleRate, fftSize and bitsPerSample (see First Steps (digital) Sound Recording - #5 by clemens) and I still got the same 84%.

It seems the Arduino Uno build reports a bit more; for the default blink sketch you get:

Sketch uses 930 bytes (2%) of program storage space. Maximum is 32256 bytes.

Global variables use 9 bytes (0%) of dynamic memory, leaving 2039 bytes for local variables. Maximum is 2048 bytes.

So there is a distinction between program memory and dynamically allocated memory.

Nevertheless I had a look at the spec of the ATSAMD21G18, the MCU on the Feather M0:

- 256KB in-system self-programmable Flash

- 32KB SRAM Memory

I thought that, compared with the “normal Uno”, this is much more than we would ever need. But it seems I’m wrong if it is a memory problem you are running into now.

Compared with this, the LoPy is outstanding:

- RAM: 4MB

- External flash: 8MB

So I’m asking myself whether this could also be a reason to move to the ESP32. The only drawback I see is that the Arduino FFT lib is not available for the ESP as far as I know, but there are other FFT libs! And in case we have WiFi nearby, we could also record and transmit with a higher bin rate.

So far I haven’t run into a memory problem: all my sketches with I2S, FFT and LMIC are below 30% program space usage. And it seems that it should port pretty well to an M4 in the future if need be.

The Arduino Sound library just takes a lot of space, which is why I avoided it and used the Arduino I2S in conjunction with Adafruit Zero FFT.

Slightly OT, as this building block is a commercial product, but it also features a Cortex M0 and an ICS43432:

- Measures sound pressure level in dB(A/B/C/D/Z) and ITU-R 468

- Measures spectrum with up to 512 bins and up to 80 samples per second

- Frequency range 40Hz to 40960Hz

- Noise floor 30dB, maximum 120dB

Documentation and examples for many programming languages:

https://www.tinkerforge.com/en/doc/Hardware/Bricklets/Sound_Pressure_Level.html

firmware, design files, code:

New information for me, good to know! I wondered how you got such a low memory footprint!

The announced frequency range down to 40 Hz is interesting, given that the ICS43432 is only specified from 50 Hz upwards.

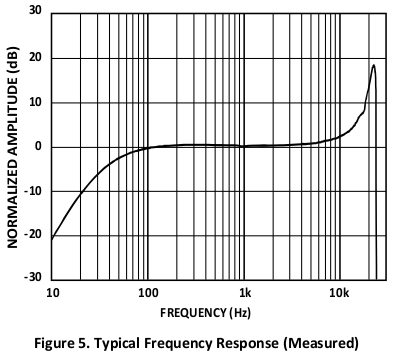

Yes and no: 50 Hz here is only the -3 dB point of the frequency response; the unit was actually measured down to 10 Hz (at 20 dB attenuation relative to the response at 1 kHz):

But: bins above, say, 22 kHz are unusable with this mic. So the 40960 Hz are likely only zipper noise! ;)

wow, there’s a lot going on here :) cool!

I am afraid it would take some time for me to get started with the code.

I am not sure if this helps, but maybe we could decide for one or several audio test-snippets and both run our code on it? Then we could compare the FFT outcomes?

I can only imagine playing the file on a PC / mobile phone and recording it with the mic of the hardware, so we would have a strong bias (speaker, background noise, mic). Or we would have to connect the input channel to an I2S audio player to get comparable outputs.